8 Minutes


Natural Language Queries vs Conversational Analytics

Natural language query is becoming all the rage in the BI and analytics space today. Several BI tools, new and old, are stumbling over one another to incorporate natural language queries (sometimes called natural language search) into their products. These range from ThoughtSpot’s SearchIQ to Tableau’s Ask Data to Power BI to MicroStrategy to Watson to Oracle Day-by-Day to AnswerRocket, among many others.

Usually, these tools allow business users to input terms into a search box, or via voice. Some tools use keyword search, while others use some very basic natural language processing to interpret the terms. The hope is that it helps workers not accustomed to traditional, structured analytics and BI tools to find the information they need to make decisions.

Natural language query, though, is an imperfect interface, and an imperfect solution to the problem of giving business users better access to data and insights. In its 2020 Hype Cycle, Gartner squarely puts Natural Language Query as “Sliding into the Trough”. The report highlights several disadvantages of tools that support natural language search, such as the need to index datasets and the high costs associated with deploying these systems. The biggest drawbacks, we believe, are that “the language interpretations are too inaccurate, or the questions supported are too basic to be useful.”

We believe that, fundamentally, natural language query by itself is the wrong way to approach the problem of data exploration and insight discovery for non-technical users. While typing a few keywords is a good way to start a Google or Bing search on the web, it is not an ideal way to do an in-depth analysis of datasets to help make business decisions.

And this is where, we believe, conversational analytics can make a difference. Conversational analytics takes a one-off natural language query and puts it in the context of a full-blown back-and-forth conversation.

In fact, that’s how humans communicate with one another today, either in 1-1 or in group settings, to exchange information and investigate some topics. Through Conversations!

For example, a lawyer doesn’t just emit a set of keywords and expect the witness to provide a well constructed answer. There’s a whole conversation that the lawyer and the witness engage in, for the lawyer to finally drill down to the one specific piece of information she is looking for.

A doctor doesn’t just pose a single question and magically expect a patient to explain their condition. They engage in a back and forth conversation where the doctor might keep asking more and more questions based on the responses of the patient, either digging deeper into some symptoms or exploring alternative conversational pathways.

When a clueless husband steps into a jewelry store to buy a gift for his spouse, he engages in a conversation with a sales associate to go from a vague notion of what he wants (to make his spouse happy) to a concrete action (buy a specific diamond necklace). The conversation here is critical to help the husband arrive at an actionable decision.

When humans communicate with one another effectively through conversations, it makes sense that they can also communicate with bots for the purpose of data analysis through conversations. So, now the trick is to make sure the bot does a good job in responding to humans and engaging along in the conversation.

In this article, we’ll go through several key properties and advantages of Conversational Analytics.

Users ask questions the way they want

The whole point of a conversational analytics interface is that the user should be free to ask their questions the way they want to; and it is the job of the bot to understand and guide the user to a relevant and actionable insight. Just like the sales associate in the jewelry store helped guide the husband from a vague goal to a specific action, perhaps by asking clarifying questions or presenting various options, the bot must do the same.

The key point here is that users get to ask questions the way they want and express their needs using language and terms they are familiar with. They need not be constrained by how the data is stored in the database. It is the job of the bot to bridge the gap between the users and the raw data.

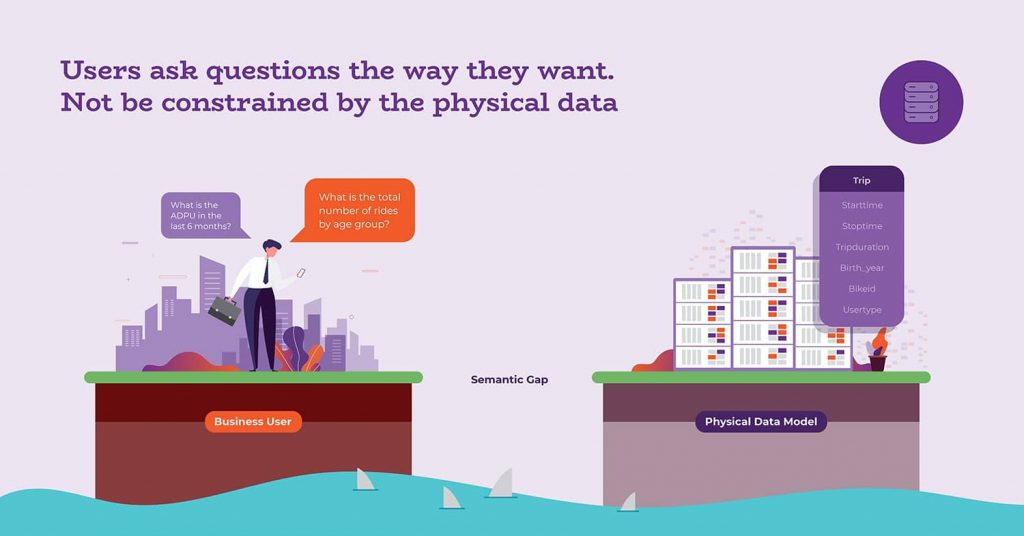

For example, let’s say that a user wants to ask questions related to a bikeshare dataset in New York City. They may want to ask questions like “What is the total number of rides by age group?”. However, the dataset may not use the term “ride” anywhere in the schema or in the raw data. It may not even have the term “age group”. Or perhaps the user uses some industry-specific jargon (like ADPU, which stands for Average trip duration per user), and asks a question like “What is the ADPU in the last 6 months?”. Again, ADPU is not a concept that is present in the database.

We refer to this gap between the business user’s understanding of the world and the raw data that is present in a database as a “semantic gap”. This semantic gap must be bridged before the user’s query can be answered.

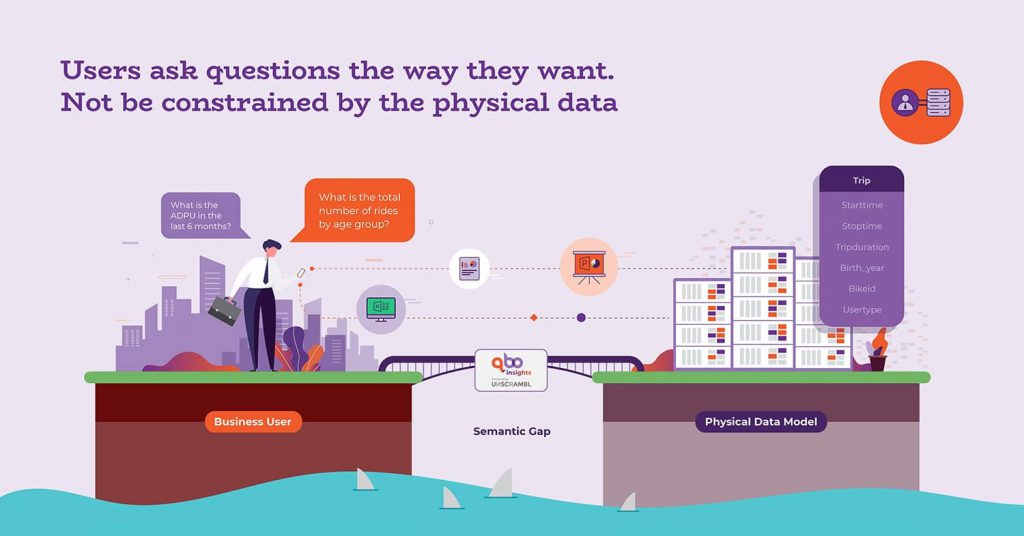

This is where the bot in a conversational analytics system comes in … it needs to bridge this semantic gap between the user and the physical data model.

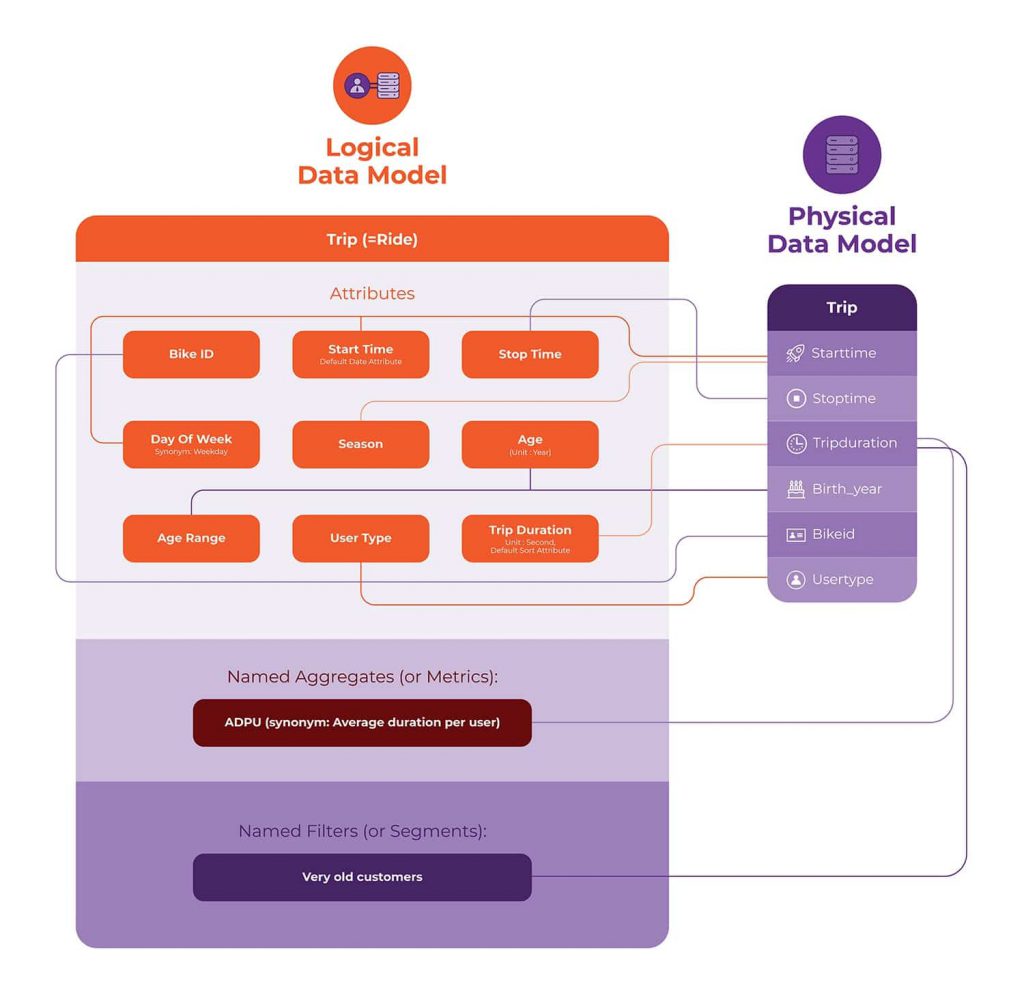

Bridging this gap requires some notion of a logical data model that lies closer to the business user’s understanding of the world. The logical data model can be mapped to the physical data model. For instance, it might define formulas for ADPU or ways of computing age and age groups from birth year. It might define synonyms for Trips. Refer here for a deeper dive into the logical data model that underpins Unscrambl’s Qbo.
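To make the idea concrete, here is a minimal sketch of what such a logical layer could look like. It is an illustration only, not a description of how Qbo works: the class name `LogicalModel`, the column names (`trip_duration`, `user_id`) and the age buckets are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class LogicalModel:
    """Hypothetical logical layer: business terms -> physical SQL expressions."""
    # Business vocabulary mapped to a canonical concept name.
    synonyms: Dict[str, str] = field(default_factory=dict)
    # Canonical concept mapped to an expression over the physical schema.
    expressions: Dict[str, str] = field(default_factory=dict)

    def resolve(self, term: str) -> str:
        """Translate a user's term into an expression over the raw data."""
        key = term.strip().lower()
        concept = self.synonyms.get(key, key)
        if concept not in self.expressions:
            raise KeyError(f"No mapping for business term: {term!r}")
        return self.expressions[concept]


def age_group(birth_year: int, current_year: int) -> str:
    """Derive an age group from birth year (bucket boundaries are assumed)."""
    age = current_year - birth_year
    if age < 25:
        return "under 25"
    if age < 45:
        return "25-44"
    return "45+"


# Assumed column names; a real schema would differ.
model = LogicalModel(
    synonyms={"ride": "trips", "rides": "trips", "trip": "trips"},
    expressions={
        "trips": "COUNT(*)",
        "adpu": "SUM(trip_duration) / COUNT(DISTINCT user_id)",
    },
)

print(model.resolve("rides"))
print(model.resolve("ADPU"))
print(age_group(1990, 2020))
```

The point of the sketch is the separation of concerns: the user's vocabulary ("rides", "ADPU", "age group") lives in the logical layer, so the physical schema never has to contain those terms.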

Handling Ambiguities

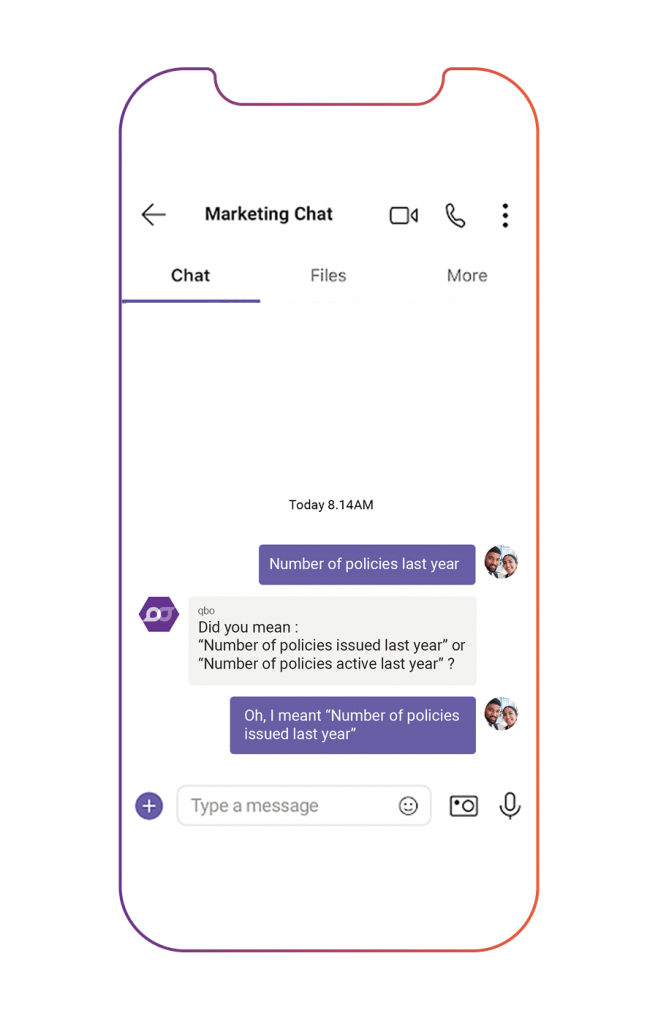

One of the challenges that arise when users ask questions the way they want is that there will be ambiguities. Users can ask poorly formed or ambiguous questions. And it is the job of the bot to help understand the possible interpretations of a given question, and then get clarifications from the user. It is the job of the bot, the personal data analyst, to try to guide the user to a concrete question that can be answered given the available data. And this can be done effectively through a conversation.

For example, sticking to the bikeshare data, a user might ask “Number of plans last year”. There can be several ambiguities here. The bot must be able to detect these ambiguities and come back with possible interpretations. It might say :

“Did you mean: 1. Number of new bikeshare plans that started last year, 2. Number of bikeshare plans that were active any time last year, or “Number of plans last year”?”

This way the user has a chance to think more about exactly what she wants and decide on one option (or possibly even get the answers to both interpretations).
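As a rough sketch of this clarification step, the snippet below looks up an ambiguous term in a small hand-written catalog and builds a “Did you mean” prompt. The catalog `INTERPRETATIONS` and the functions `clarify` and `prompt` are hypothetical names for this example; a real bot would presumably derive candidate readings from its data model rather than from a hard-coded table.

```python
from typing import Dict, List

# Hypothetical catalog: an ambiguous term -> templates for its possible readings.
INTERPRETATIONS: Dict[str, List[str]] = {
    "plans": [
        "Number of new bikeshare plans that started {period}",
        "Number of bikeshare plans that were active any time {period}",
    ],
}


def clarify(question: str, period: str) -> List[str]:
    """Return candidate interpretations; an empty list means no known ambiguity."""
    words = question.lower().split()
    options: List[str] = []
    for term, templates in INTERPRETATIONS.items():
        if term in words:
            options.extend(t.format(period=period) for t in templates)
    return options


def prompt(options: List[str]) -> str:
    """Format the candidate interpretations as a numbered clarifying question."""
    if not options:
        return ""
    lines = ["Did you mean:"]
    lines += [f"{i}. {option}" for i, option in enumerate(options, 1)]
    return "\n".join(lines)


options = clarify("Number of plans last year", period="last year")
print(prompt(options))
```

Whichever option the user picks (or both) then becomes the concrete question the bot answers.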

Letting the Conversation “Flooooow”

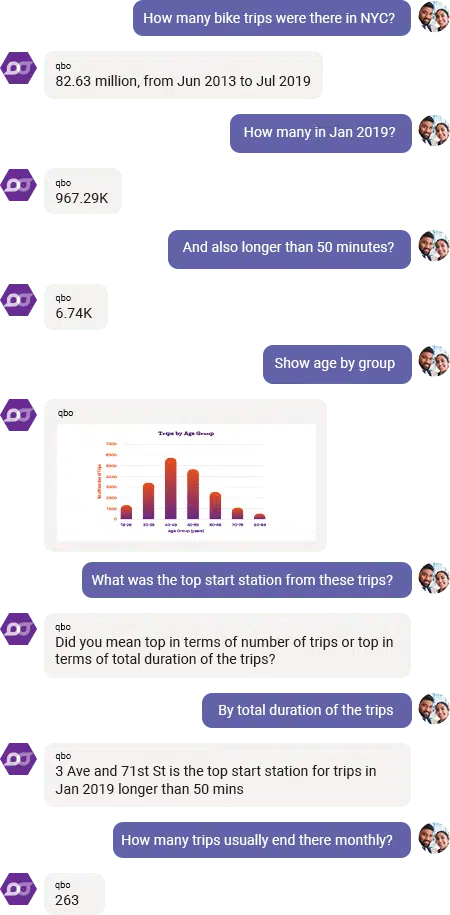

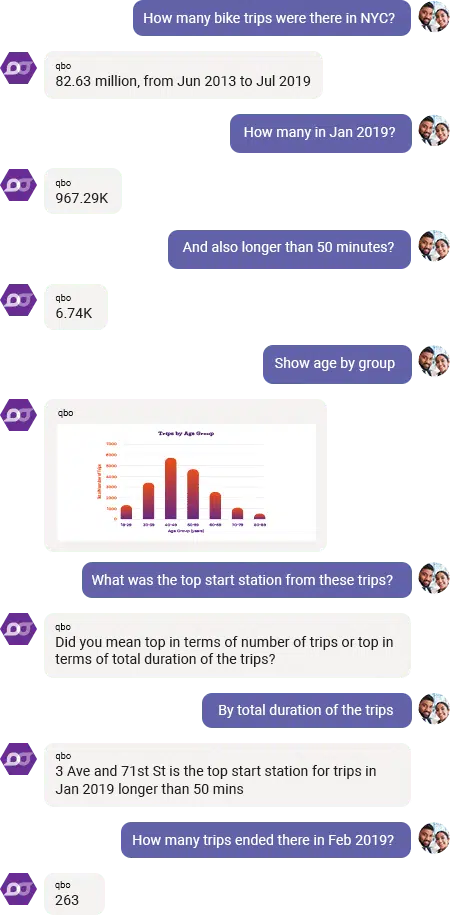

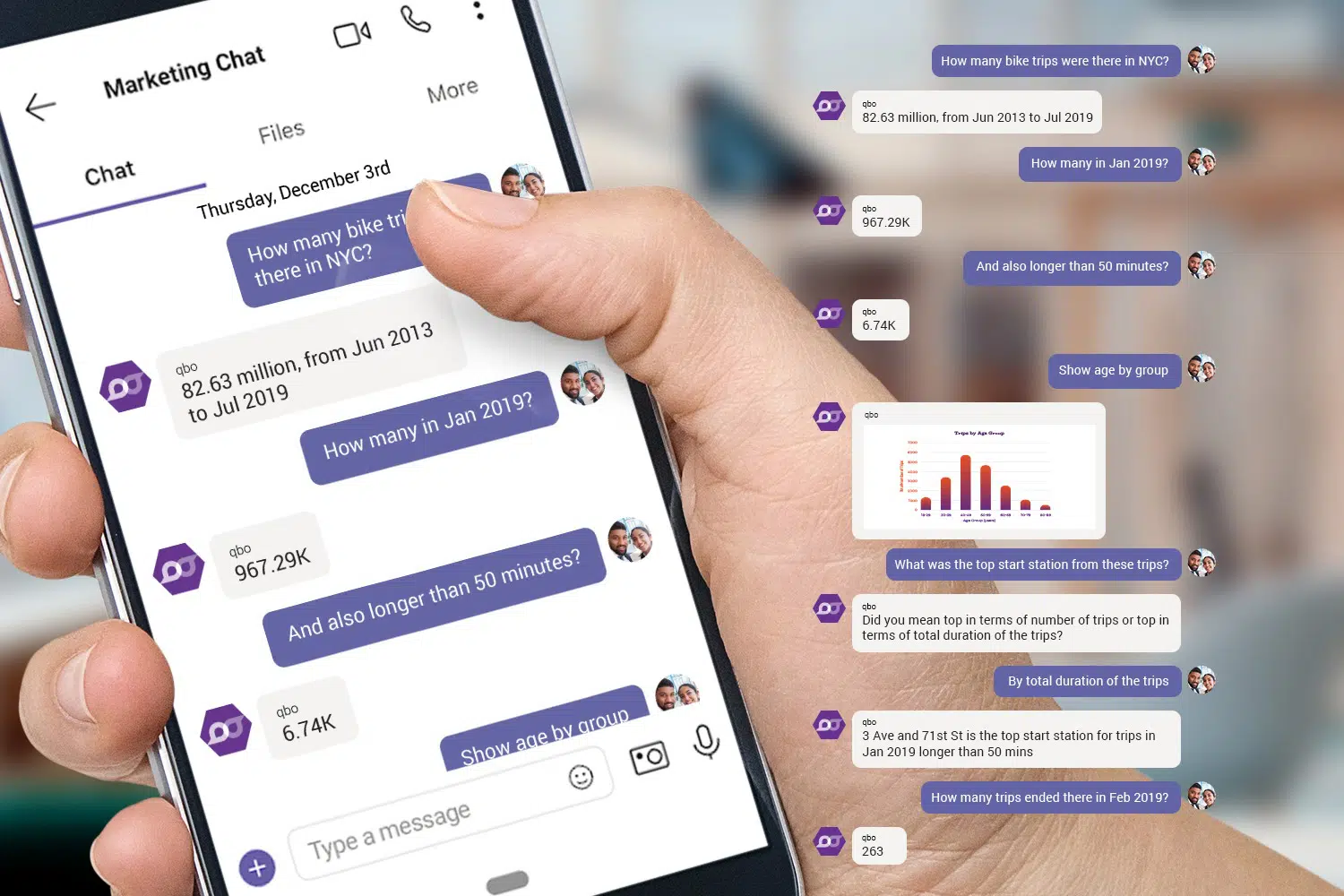

One of the unique and most powerful features of a conversation is that it can flow in so many different ways and undertake different paths. The important thing in the path the conversation takes is that, at every point, there is a certain context that is built up. And this context is used to interpret all subsequent utterances.

A data exploration conversation that a user can have with a bot can also incorporate rich context. A user may start with a question like “How many bike trips were there in NYC?”, and after getting the answer, may then refine her previous question by asking “How many in Jan 2019?”. Now, this refinement question must be interpreted in the context of the previous question. This dramatically simplifies the interaction for the user.
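One simple way to picture this context carry-over is slot filling: the follow-up question only specifies what changed, and anything it leaves out is inherited from the previous question. The sketch below assumes the questions have already been parsed into a small `Query` structure; that structure and the `merge` function are illustrative assumptions, not a description of any particular product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Query:
    """Hypothetical parsed form of a user's question."""
    metric: Optional[str] = None
    city: Optional[str] = None
    period: Optional[str] = None


def merge(context: Optional[Query], new: Query) -> Query:
    """Fill slots missing from the follow-up with values from the prior query."""
    if context is None:
        return new
    return Query(
        metric=new.metric or context.metric,
        city=new.city or context.city,
        period=new.period or context.period,
    )


# "How many bike trips were there in NYC?"
first = Query(metric="bike trips", city="NYC")
# "How many in Jan 2019?" only mentions a time period.
follow_up = Query(period="Jan 2019")

resolved = merge(first, follow_up)
print(resolved)
```

Here the follow-up inherits the metric and the city from the first question, so the bot can answer “How many bike trips were there in NYC in Jan 2019?” without the user having to retype it.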

There are several possible ways a data analysis conversation can floooow. The user may keep digging deeper into some aspect of the data to extract an insightful needle in the haystack. Or she may jump into a completely new thread of investigation based on some observation.

Keeping track of your analyses

One of the useful features of today’s technology-powered conversations is that there is always a transcript. When humans converse with one another on chat platforms like Facebook Messenger, WhatsApp, Skype, Google Chat, etc., there is a transcript of the conversation that they can always refer back to.

Similarly, any data analysis conversation with a bot leaves behind a transcript, which can be referred back to at any time in the future. For example, if the user who had the conversation with the bot shown in Figure 5 above finds himself, months later, scratching his head over why he was ever interested in the station on “3 Ave & 71st St”, he can go back to that transcript and see what sequence of questions led him to that station.

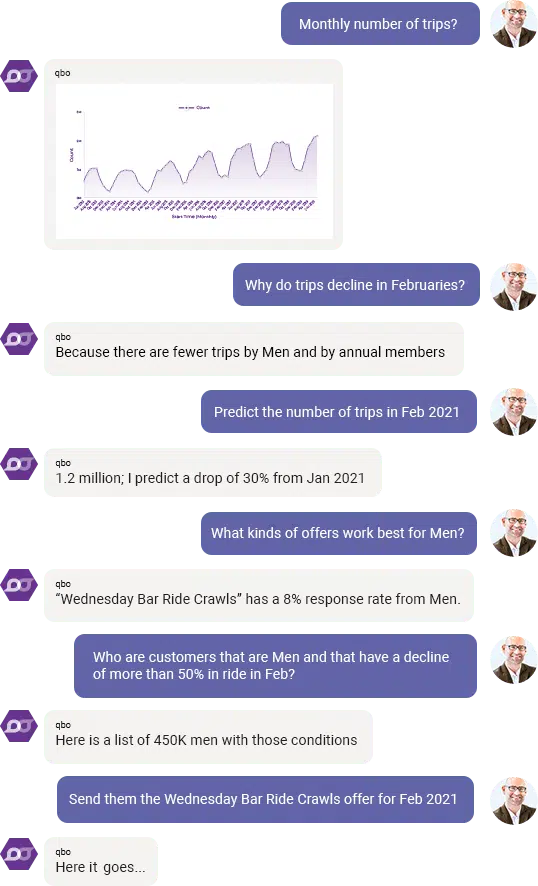

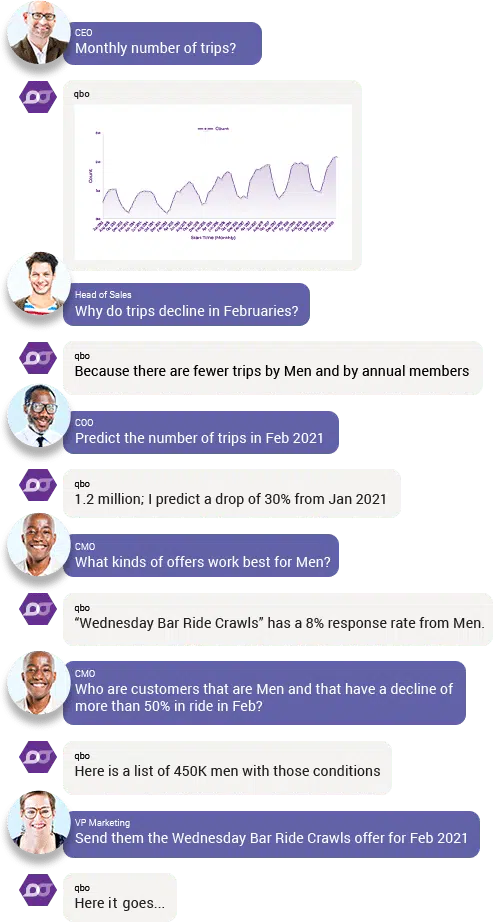

From What to Why to What Next to How

A common conversational pathway starts with What questions (what happened), moves on to Why questions (why did that happen), then What Next questions (what will happen next if we do nothing), and finally How questions (how can we improve future outcomes). In the analytics spectrum, this spans the range from Descriptive to Diagnostic to Predictive to Prescriptive analytics.

We believe conversational analytics bots must also be able to navigate this space of questions. In the bikeshare example, this can go from :

“What is the monthly number of trips?” (Descriptive)

To

“Why do trips decline in February of every year?” (Diagnostic)

To

“Predict the number of trips in Feb 2021” (Predictive)

To

“How can I increase trips taken this February 2021?” (Prescriptive)

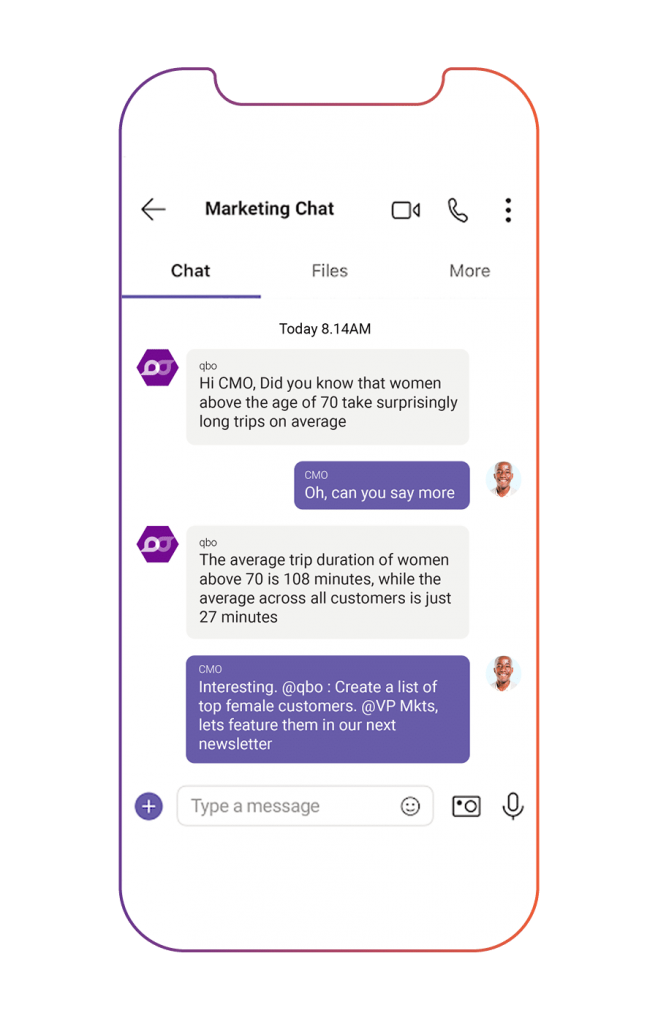

Group Conversation

Conversations between humans can be one-to-one or in a group. A group conversation adds more richness and potential to a conversation. And the same holds true when one of the members in the group chat is a data analysis bot.

Having a data analysis bot in a group conversation allows multiple people to collaborate while exploring data and making decisions. It even encourages data literacy by having everyone in a team look at how an expert might ask meaningful questions about the data. And again, a transcript of the data analysis is automatically available in case anyone wants to review the path the conversation took towards making a decision.

Machine Initiated Conversations

Finally, a most interesting feature of conversational analytics is that the human need not be the one who initiates the conversation! The bot can take the first step in engaging with a human when it determines there is some benefit in informing the human of some fact or analysis. With repeated interactions, the bot can learn when and when not to initiate a conversation, and on what topics.

In summary, we at Unscrambl are just scratching the surface in terms of the power that conversational analytics can have in transforming users’ lives, both inside and outside enterprise settings. Join us on this magical adventure.